Augmenting Airtable with Serverless

A disclaimer: this post is not about best practices, nor does it prescribe a solution. It outlines a unique challenge and the approach we took to get past the hurdle. So let's talk about what we faced! Over the last three years, we helped a client move much of their internal tooling over to a tool called Airtable. You may be wondering why Airtable, and the answer is simple: speed. Let's be honest, many of us have been there: a room full of executives who wanted something yesterday, and your team is tasked with building a time machine.

The Challenge

Now that you understand the motivation, let's fast forward. We are fully on board with Airtable; we have architected a core set of environments that meet the needs of specific departments, and folks are off to the races! Well, not quite. This client's peak hours are between 2 p.m. and 5 p.m., and coincidentally, that is also when we started to notice high-traffic environments degrading. For some context, Airtable lets you define automated workflows through a feature called automations. Automations give the user an easy interface for defining a workflow along with the conditions that will trigger it. Once those conditions are met, the automation executes and the user-defined workflow runs. There are some limitations to be aware of, however:

- An Airtable base is limited in the number of automations you can create.

- A single automation instance has a runtime limit of 30 seconds.

- A single automation instance can make a set number of HTTP requests.

- The concurrency of these automations is handled by Airtable behind the scenes.

- An Airtable account limits the total number of automation runs a workspace can execute.

- Automation compute shares resources with the rest of the operations occurring in a base, e.g., user actions and API calls.

What We Did About It

The first lever we pulled was working directly with Airtable on possible performance enhancements. We did end up getting a higher compute instance provisioned for high-traffic bases. We knew that the larger instance would not be enough; we needed to be able to scale past the amount of user interaction we had at the time, so what was left? The key was recognizing that automations share compute resources with the rest of the base, so how could we offload the CPU time? Here is what we did.

- We asked Airtable for the CPU and memory metrics of all the automations in our high-traffic instances.

- We used that list to identify candidates for "promotion" out of Airtable.

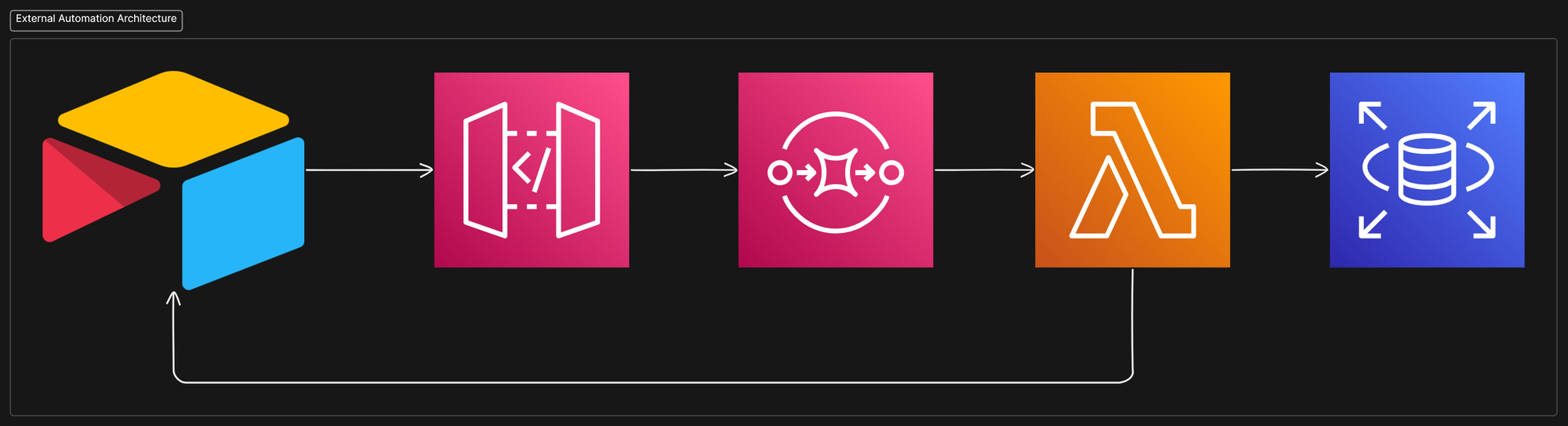

- We leveraged Airtable's webhooks to architect an "automation" solution from our end.

- When the output of an automation had to interact with a base, we used Airtable's REST API to push those results back.
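The last step, pushing results back into a base, can be sketched roughly as follows. This is a minimal illustration and not our production code: it assumes Airtable's REST endpoint for updating records in batches of up to 10, and the base ID, table name, token, and pause length are placeholders you would supply yourself.

```python
import json
import time
import urllib.parse
import urllib.request

AIRTABLE_API = "https://api.airtable.com/v0"
MAX_RECORDS_PER_REQUEST = 10  # batch size Airtable accepts for record updates


def chunk(items, size=MAX_RECORDS_PER_REQUEST):
    """Split a list into batches of at most `size` items."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def build_update_request(base_id, table, records, token):
    """Build (but do not send) a PATCH request that updates one batch of records."""
    url = f"{AIRTABLE_API}/{base_id}/{urllib.parse.quote(table, safe='')}"
    body = json.dumps({"records": records}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PATCH",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


def push_results(base_id, table, records, token, pause_seconds=0.25):
    """Send every batch, pausing between calls to stay under the per-base rate limit."""
    for batch in chunk(records):
        request = build_update_request(base_id, table, batch, token)
        with urllib.request.urlopen(request, timeout=10) as response:
            response.read()
        # Assumed pause; tune it to the rate limit that applies to your base.
        time.sleep(pause_seconds)
```

Each record in `records` is expected to look like `{"id": "rec...", "fields": {...}}`. Batching keeps the number of HTTP calls low, which matters because API calls also consume the base's shared resources.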

Here Is What It Looked Like

We moved the compute over to a serverless function on AWS. This unlocked a few things for us:

- We moved valuable CPU load out of high-traffic Airtable bases.

- We gained some control over the concurrency of automation runs.

- We got a longer runtime for complex operations.

- We gained access to our own metrics over the lifecycle of any single run.
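To make the points above concrete, here is a minimal sketch of what such a function could look like as a Python AWS Lambda handler. It assumes the function sits behind an HTTP endpoint that receives the webhook call, and that the request body carries a `recordId` field; both are assumptions for illustration, and `run_workflow` is a hypothetical stand-in for whatever logic used to live in the automation.

```python
import json
import time


def run_workflow(payload):
    """Hypothetical stand-in for logic promoted out of an Airtable automation."""
    return {"recordId": payload.get("recordId"), "status": "processed"}


def handler(event, context):
    """Lambda entry point: parse the webhook body, run the workflow, log timing."""
    started = time.perf_counter()
    payload = json.loads(event.get("body") or "{}")

    result = run_workflow(payload)

    duration_ms = round((time.perf_counter() - started) * 1000, 2)
    # One structured log line per run gives us metrics we can query later.
    print(json.dumps({
        "metric": "automation_run",
        "recordId": result["recordId"],
        "duration_ms": duration_ms,
        "remaining_ms": context.get_remaining_time_in_millis(),
    }))
    return {"statusCode": 200, "body": json.dumps(result)}
```

Concurrency is not visible in the code itself: on Lambda it is a function-level setting (reserved concurrency), and the timeout is configurable well beyond the 30 seconds an automation run is allowed.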

We saw immediate results, and our users noticed too. Hurray! Of course, while this approach did solve our immediate problems, it added a whole new level of complexity to managing our Airtable instance, so we were careful about deciding what should be "promoted."
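One way to keep that decision systematic is to rank automations by how much compute they consume. The sketch below is only an illustration of the idea: the field names and the ranking rule (average CPU time multiplied by daily runs) are hypothetical, not the format Airtable gave us or the exact criteria we used.

```python
from dataclasses import dataclass


@dataclass
class AutomationMetrics:
    """Hypothetical shape for per-automation metrics."""
    name: str
    avg_cpu_ms: float
    avg_memory_mb: float
    runs_per_day: int


def promotion_candidates(metrics, limit=3):
    """Return the names of the automations with the highest daily CPU cost."""
    ranked = sorted(
        metrics,
        key=lambda m: m.avg_cpu_ms * m.runs_per_day,  # assumed cost estimate
        reverse=True,
    )
    return [m.name for m in ranked[:limit]]
```

Keeping `limit` small reflects the trade-off above: every promoted automation is one more piece of infrastructure to maintain outside Airtable.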

We learned a lot during this excursion, and we could always improve the solution. It shows that clever solutions exist; you just need a little know-how, and the tool you use needs to allow some room for extension. We love finding interesting solutions to tough problems, so if you are interested in partnering with us, reach out and let's chat.